Visual Question Answering (VQA)

Visual Analytics

Visual Analytics

| January 5, 2015

Visual Analytics

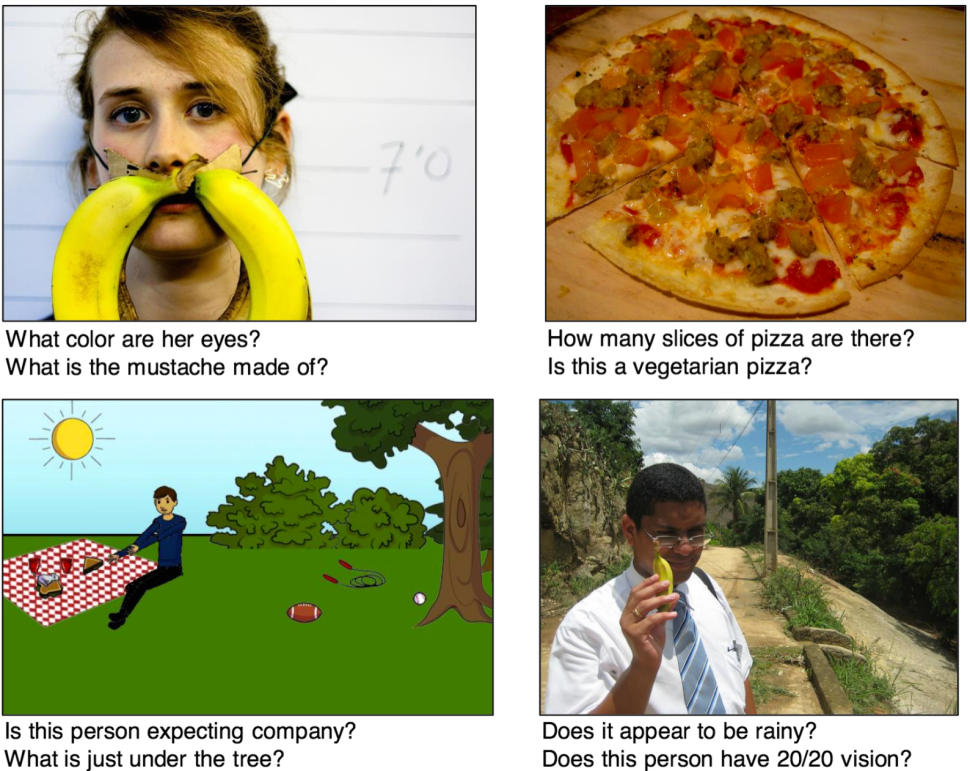

Visual Question Answering is a new dataset containing open-ended questions about images.

Given an image and a free-form, natural language question about the image (e.g., “What kind of store is this?”, “How many people are waiting in the queue?”, “Is it safe to cross the street?”), the machine’s task is to automatically produce a concise, accurate, free-form, natural language answer (“bakery”, “5”, “Yes”).

Answering any possible question about an image is one of the ‘holy grails’ of semantic scene understanding. VQA represents not a single narrowly-defined problem (e.g., image classification) but rather a rich spectrum of semantic scene understanding problems and associated research directions. Each question in VQA may lie at a different point on this spectrum: from questions that directly map to existing well-studied computer-vision problems (“What is this room called?” = indoor scene recognition) all the way to questions that require an integrated approach of vision (scene), language (semantics), and reasoning (understanding) over a knowledge base (“Does the pizza in the back row next to the bottle of Coke seem vegetarian?”). Consequently, our research effort maps to a sequence of waypoints along this spectrum. Motivated by addressing VQA from a variety of perspectives, this research program generates new datasets, knowledge, and techniques in:

- pure computer vision

- integrating vision + language

- integrating vision + language + common sense

- interpretable models and

- combining a portfolio of methods

In addition, novel contributions are being made to:

- train the machine to be curious and actively ask questions to learn

- using VQA as a modality to learn more about the visual world than what existing annotation modalities allow and

- train the machine to know what it knows and what it does not

VQA is directly applicable to a variety of applications of high societal impact that involve humans eliciting situationally-relevant information from visual data; where humans and machines must collaborate to extract information from pictures. Examples include aiding visually-impaired users in understanding their surroundings (“What temperature is this oven set to?”), analysts in making decisions based on large quantities of surveillance data (“What kind of car did the man in the red shirt drive away in?”), and interacting with a robot (“Is my laptop in my bedroom upstairs?”). This project has the potential to fundamentally improve the way visually-impaired users live their daily lives, and revolutionize how society at large interacts with visual data.

Publications

In Proceedings of the International Conference on Computer Vision (ICCV), (pp ), 05/2015

Citatations: BibTeX

In the News