Sanghani Center Student Spotlight: Xiaolong Li

Xiaolong Li is a Ph.D. student in computer engineering. His main interest is in computer vision, with a focus on deep 3D representation learning for dynamic scene understanding.

“Building robust smart algorithms will help machines understand the 3D world around us,” Li said.

“As human beings, we use our hands to interact with different objects like tools and complete physical tasks,” Li said. “But if we had a depth camera that could capture points on the visible surface of human hands and the grasped object, we could estimate the pose of both human hands and objects, that is, the 3D locations of the hand joints, together with the location and orientation of the object, with robustness under occlusions and generalizability to novel objects or novel hands.”

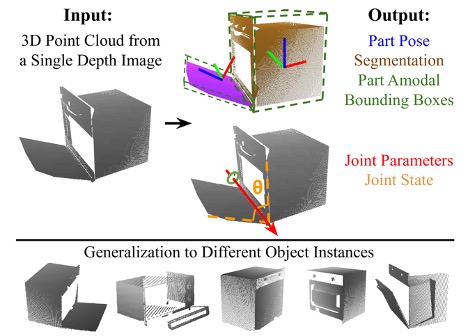

Li, who is advised by A. Lynn Abbott, presented his collaborative paper, “Category-Level Articulated Object Pose Estimation,” at the virtual 2020 Conference on Computer Vision and Pattern Recognition (CVPR) last June.

The paper addresses the task of category-level pose estimation for articulated objects from a single depth image, presenting a novel approach that correctly accommodates object instances not seen during training. The study introduced the Articulation-aware Normalized Coordinate Space Hierarchy (ANCSH), a canonical representation for different articulated objects in a given category. By leveraging the canonicalized joints and the kinematic constraints they induce, the researchers demonstrated improved performance in part pose and scale estimation, as well as high accuracy for joint parameter estimation in camera space.

“The Sanghani Center has provided me with the opportunity to collaborate with excellent researchers from diverse backgrounds,” Li said.

Li earned a bachelor’s degree in electrical engineering from Huazhong University of Science and Technology in China.

Projected to graduate in Spring 2022, he would like to find a position where he can devote his work to augmented reality (AR) and virtual reality (VR) research for smart agents interacting with a 3D environment.