Semi-Supervised Learning of Cyberbullying and Harassment Patterns in Social Media

Network Science

Network Science

| June 1, 2015

Network Science

Semi-Supervised Learning of Cyberbullying and Harassment Patterns in Social Media develops algorithms that identify detrimental online social behaviors.

The goal of this research project is to develop algorithms for identifying detrimental online social behavior on the Internet. A growing majority of human communication occurs over Internet services, and advances in mobile and connected technology have amplified individuals’ abilities to connect and stay connected to each other. Moreover, the digital nature of these services enables them to measure and record unprecedented amounts of data about social interactions. Unfortunately, the amplification of social connectivity also includes the disproportionate amplification of negative aspects of society, leading to significant phenomena such as online harassment, cyberbullying, and hate speech. In the proposed research, we will develop automated, data-driven methods for identification of harassment and cyberbullying. These methods could enable technologies that mitigate the harm and toxicity created by these detrimental behaviors.

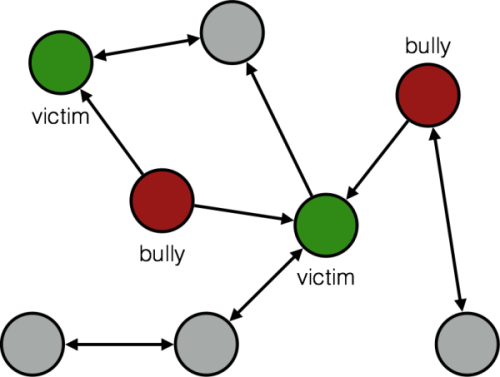

Analysis of such behavior requires understanding of not only language, but also social structures. The complexities underlying these behaviors make automatic detection difficult for existing computational approaches. For example, given a corpus of social media communication records, keyword searches or sentiment analyses are insufficient to identify instances of harassment. Instead, it is necessary to understand who is harassing whom and the social roles of the instigators and the victims. Using current tools, effective analysis therefore requires human experts with a working comprehension of societal norms and patterns.

The algorithms we will develop and investigate in this research project will encode such complexities into efficiently learnable models. These algorithms are often classified as relational learning models, because the data and concepts involve relationships. The relational models will be trained in a semi-supervised manner, where human experts can provide high fidelity annotations in the form of specific instances and examples of bullying or key phrases that are highly indicative of bullying. The algorithms will then extrapolate from these expert annotations to find other likely key-phrase indicators and specific instances of bullying. These computational models will be reminiscent of methods used for recommendation engines, retargeting these often commercially motivated technologies toward social welfare.